BACKGROUND

We humans hear frequency logarithmically, to the base 2. That means each higher octave is a doubling of the frequency. For example, the difference between 20 Hz and 40 Hz sounds the same to the human ear as the difference between 4000 Hz and 8000 Hz. Even though the latter two notes are much further apart in absolute terms, both pairs are exactly one octave apart to human perception.
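As a minimal sketch of that arithmetic, the interval between two frequencies in octaves is the base-2 logarithm of their ratio. The function name `octaves_between` is my own, chosen for illustration.

```python
import math


def octaves_between(f_low_hz: float, f_high_hz: float) -> float:
    """Return the interval from f_low_hz up to f_high_hz, measured in octaves."""
    return math.log2(f_high_hz / f_low_hz)


# The two pairs differ by 20 Hz and 4000 Hz in absolute terms,
# but both have a frequency ratio of 2, which is one octave.
print(octaves_between(20, 40))
print(octaves_between(4000, 8000))
```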

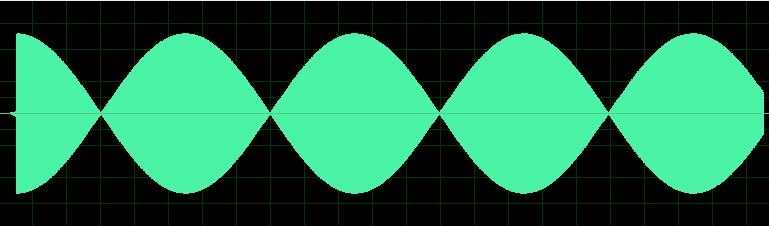

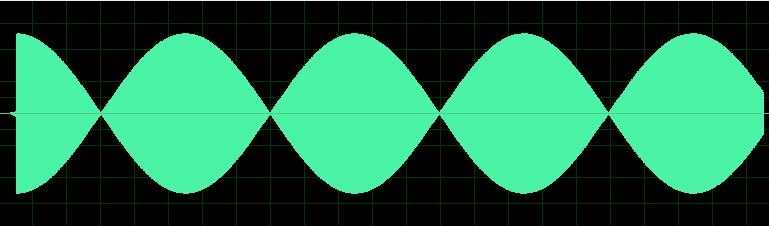

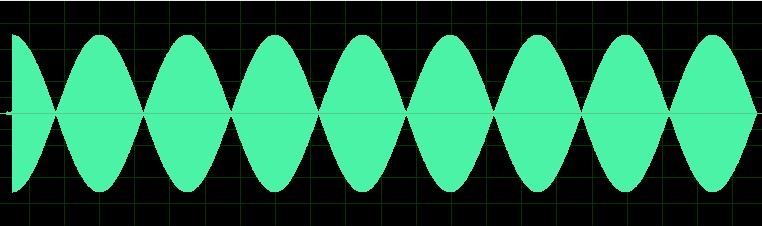

When two different frequencies sound together, we hear the net resulting waveform of one superimposed on the other. If they are far apart, we perceive them as two distinct tones. But if they are close enough together, we perceive them as a single tone with "beat" pulses in it. For example, if we hear A=440 Hz and A=443 Hz at the same time, we hear an "A" that pulses 3 times per second. The rate of the pulsing is the difference between the two frequencies.
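Here is a small sketch of why the pulsing rate equals the frequency difference. It relies on the standard trigonometric identity sin(a) + sin(b) = 2 sin((a+b)/2) cos((a-b)/2): the sum of two equal-amplitude sine tones is a tone at the average frequency, multiplied by a slowly varying envelope whose loudness peaks |f1 - f2| times per second. The function names are mine, and equal amplitudes are an assumption made to keep the identity simple.

```python
import math


def beat_rate_hz(f1_hz: float, f2_hz: float) -> float:
    """Beats per second heard when two close tones sound together."""
    return abs(f1_hz - f2_hz)


def superimposed(f1_hz: float, f2_hz: float, t: float) -> float:
    """Sample of the two sine tones (equal amplitude, assumed) added at time t seconds."""
    return math.sin(2 * math.pi * f1_hz * t) + math.sin(2 * math.pi * f2_hz * t)


def carrier_times_envelope(f1_hz: float, f2_hz: float, t: float) -> float:
    """The same sample, rewritten as a slow envelope times a tone at the average frequency."""
    envelope = 2 * math.cos(math.pi * (f1_hz - f2_hz) * t)
    carrier = math.sin(math.pi * (f1_hz + f2_hz) * t)
    return envelope * carrier


print(beat_rate_hz(440, 443))  # 3 beats per second, as in the A=440 / A=443 example

# The two forms agree at any instant (up to floating-point rounding).
t = 0.123
print(abs(superimposed(440, 443, t) - carrier_times_envelope(440, 443, t)) < 1e-9)
```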

How close together do they have to be to create audible beats? Most references say within something like 10 Hz. Beyond that, the beats are perceived differently, as a dissonant noise. When the tones are even further apart, the difference in frequency itself becomes an audible frequency and may be perceived as a separate tone. This is called a difference tone. I may eventually extend this example to include it, but for now it is beyond the scope of this example. Here we focus on the audibility of beats.

INTONATION AUDIBILITY

So the question is, when does intonation error become perceptible in music?

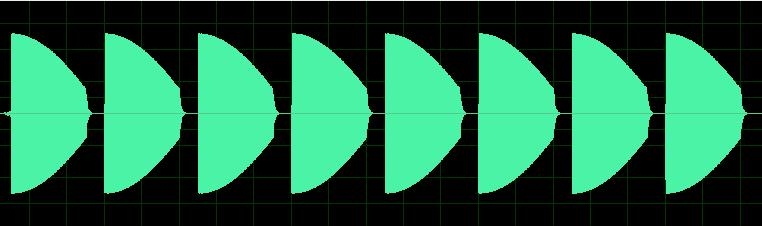

I believe that with most people, it becomes perceptible only when the notes are longer than the beats.

That is, when any individual note contains more than 1 beat.

In other words, if each individual note ends quickly so that a beat never pulses,

then there are no beats and we perceive the notes as "in tune".
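To make that hypothesis concrete, here is a rough sketch that counts how many beats fit inside one note and applies the "more than 1 beat" rule described above. The threshold is my hypothesis, not an established psychoacoustic fact, and the function names and note durations are made up for illustration.

```python
def beats_per_note(f1_hz: float, f2_hz: float, note_duration_s: float) -> float:
    """Number of beat pulses that occur within a single note."""
    return abs(f1_hz - f2_hz) * note_duration_s


def beats_likely_audible(f1_hz: float, f2_hz: float, note_duration_s: float) -> bool:
    """Hypothesis from the text: beats are heard only if a note contains more than 1 beat."""
    return beats_per_note(f1_hz, f2_hz, note_duration_s) > 1.0


# A=440 against A=443 beats 3 times per second.
print(beats_per_note(440, 443, 0.25))        # 0.75 of a beat in a quarter-second note
print(beats_likely_audible(440, 443, 0.25))  # False: the note ends before one full beat
print(beats_likely_audible(440, 443, 2.0))   # True: a 2-second note contains 6 beats
```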

This means the perception of intonation is a bit more complex than simply hearing that tones have different frequencies.

It would depend not only on how far apart the tones are,

but also what octave they are in,

and also how fast the notes are moving.

This example demonstrates this idea over a 2-octave range:

at 500 Hz, at 1000 Hz, and at 2000 Hz.

We start with a difference of 2 Hz at 500, which becomes 4 Hz at 1000 and 8 Hz at 2000.
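The reason the difference doubles with each octave is that the mistuning stays the same musical interval: 502/500, 1004/1000, and 2008/2000 are the same frequency ratio. The sketch below checks this using cents (1200 cents per octave, a standard unit I am introducing here; it is not used elsewhere in this example). The function name is my own.

```python
import math


def cents_sharp(reference_hz: float, offset_hz: float) -> float:
    """Size of the mistuning in cents (1200 cents = 1 octave)."""
    return 1200 * math.log2((reference_hz + offset_hz) / reference_hz)


# (reference frequency, offset) pairs taken from the three octaves in this example.
trials = [(500, 2), (1000, 4), (2000, 8)]

for reference_hz, offset_hz in trials:
    # The beat rate equals the offset in Hz; the interval in cents stays constant.
    print(f"{reference_hz} Hz: {offset_hz} beats/s, "
          f"{cents_sharp(reference_hz, offset_hz):.2f} cents sharp")
```

Each line reports the same mistuning of roughly 6.9 cents, while the beat rate doubles from one octave to the next.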

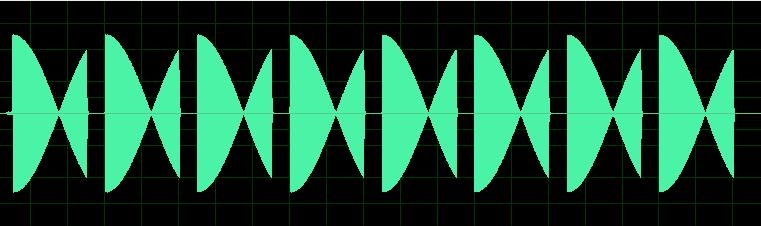

FIRST OCTAVE

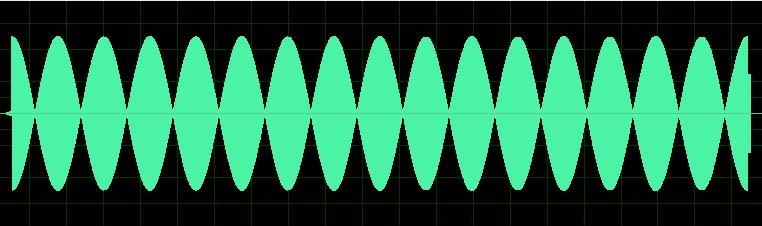

SECOND OCTAVE

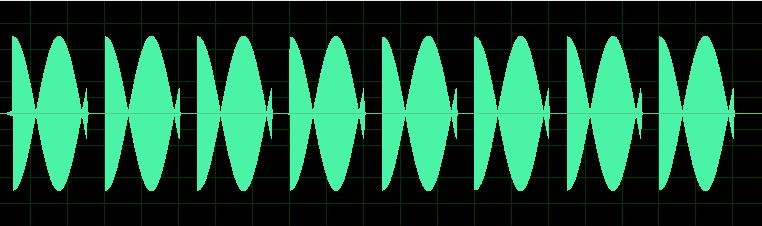

THIRD OCTAVE

I make the following observations:

Some predictions this experiment suggests, but doesn't scientifically prove:

The net result is that an intonation error that is inaudible at 500 Hz becomes audible one octave higher and obvious two octaves higher. Thus, intonation does indeed get more demanding as pitch rises: the same relative error produces faster beats, so the human ear becomes more sensitive to intonation differences at high frequencies. When piccolo, flute, and violin (and to some extent oboe) players are off in their intonation, most listeners will hear the results more easily and will perceive them as more "bad" sounding or dissonant than the same intonation error in the lower-pitched instruments.

Of course, all musicians should strive to play in tune. The point is not that the lower-pitched instruments don't have to be in tune, but that the results of intonation problems in higher-pitched instruments are more apparent and perhaps more dissonant to the listener.

NOTE: I don't know whether this experiment has anything to do with absolute pitch perception. Perhaps those with absolute pitch can hear that the two tones are out of tune even when the notes are quicker than the beats. Then again, perhaps not; it's an interesting question. Several people have listened to these samples and reported perceptions similar to my own, but none of them had (or claimed to have) absolute pitch perception.