Blind Testing: Definitions

The goal of a blind audio test is to differentiate two sounds by listening alone with no other clues. Eliminating other clues ensures that any differences detected were due to sound alone and not to other factors.

A blind audio test (also called A/B) is one in which the person listening to the sounds A and B doesn’t know which is which. It may involve a person conducting the test who does know.

A double-blind audio test (also called A/B/X) is one in which neither the person listening, nor the person conducting the test, knows which is which.

In a blind test, it is possible for the test conductor to give clues or “tells” to the listener, whether directly or indirectly, knowingly or unknowingly. A double-blind test eliminates this possibility.

What is the Point?

We do blind testing because our hearing perception is affected by other factors: sighted listening, expectation bias, framing bias, and so on. These influences are often subconscious. Blind testing eliminates them to tell us what we are actually hearing.

The goal of an A/B/X test is to differentiate two sounds by listening alone with no other clues. Key word: differentiate.

- A blind test does not indicate preference.

- A blind test does not indicate which is “better” or “worse”.

Most people, especially audio objectivists, would say that if you pass the test, then you can hear the difference between the sounds, and if you don’t, then you can’t. Alas, it is not that simple.

1. If you pass the test, it doesn’t necessarily mean you can hear the difference.

   - You could get lucky: a false positive.

2. If you fail the test, it doesn’t necessarily mean you can’t hear the difference.

   - You might tell them apart better than random guessing, but not often enough to meet the test threshold: a false negative.

3. If you can hear the difference, it doesn’t necessarily mean you’ll pass the test.

   - False negative, like case (2).

4. If you can’t hear the difference, it doesn’t necessarily mean you’ll fail the test.

   - False positive, like case (1).

Simply put, the odds are that if you pass the test, you can hear a difference, and if you fail, you can’t. But exceptions to this rule do happen; how frequently depends on the test conditions. Even a blind squirrel sometimes finds a nut!

Hearing is Unique

Hearing is quite different from touch or sight in an important way that is critical to blind audio testing. If I gave you two similar objects and asked you to tell whether they are exactly identical, you can perceive and compare them both simultaneously. That is, you can view or touch both of them at the same time. But not with sound! If I gave you two audio recordings, you can’t listen to both simultaneously. You have to alternate back and forth, listening to one, then the other. In each case, you compare what you are actually hearing now, with your memory of what you were hearing a moment ago.

In short: audio testing requires an act of memory. Comparing 2 objects by sight and touch can be done with direct perception alone. But comparing 2 sounds requires both perception and memory.

Audio objectivists raise a common objection: “But surely, this makes no difference. It only requires a few seconds of short-term memory, which is near perfect.” This sounds reasonable, but the evidence says otherwise. In A/B/X testing, sensitivity depends critically on fast switching. Switching delays as short as 1/10 second reduce sensitivity, meaning the delay masks differences that are reliably detected with instantaneous switching. This shows that our echoic memory is quite poor. Instantaneous switching improves sensitivity, but it still requires an act of memory: even then, you are comparing what you are hearing now with your memory of what you were hearing a moment before.

This leaves us with the conundrum that the perceptual acuity of our hearing is better than our memory of it. We can’t always remember or articulate what we are hearing. Here, audio objectivists raise a common objection: “If you can’t articulate or remember the differences you hear, then how can they matter? They’re irrelevant.” Yet we know from numerous studies in psychology that perceptions we can’t articulate or remember can still affect us subconsciously — for example subliminal advertising. Thus it is plausible that we hear differences we can’t articulate or remember, and yet they still affect us.

If this seems overly abstract or metaphysical, relax. It plays no role in the rest of this discussion, which is about statistics and confidence.

Accuracy, Precision, Recall

More definitions:

A false positive means the test said the listener could tell them apart, but he actually could not (maybe he was guessing, or just got lucky). Also called a Type I error.

A false negative means the test said the listener could not tell them apart, but he actually can (maybe he got tired or distracted). Also called a Type II error.

Accuracy is what % of the trials the listener got right. An accurate test is one that is rarely wrong.

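Precision is what % of the test positives are true positives. High precision means the test doesn’t generate false positives (or does so only rarely). It is closely related to, though not strictly the same as, specificity (the % of true negatives that the test correctly rejects); both improve as false positives drop.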

Recall is what % of the true positives pass the test. High recall means the test doesn’t generate false negatives (or does so only rarely). Also called sensitivity.

With these definitions, we can see that a test having high accuracy can have low precision (all its errors are false positives) or low recall (all its errors are false negatives), or it can have balanced precision and recall (its errors are a mix of false positives & negatives).
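A small sketch makes this concrete. The counts below are invented purely for illustration (100 listeners, 10 of whom can truly hear the difference), and `metrics` is just a helper name chosen here.

```python
def metrics(tp, fp, fn, tn):
    """Return (accuracy, precision, recall) from confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    precision = tp / (tp + fp)   # share of test positives that are real
    recall = tp / (tp + fn)      # share of real positives that pass
    return accuracy, precision, recall

# Hypothetical case A: all 8 errors are false positives.
case_a = metrics(tp=10, fp=8, fn=0, tn=82)
# Hypothetical case B: all 8 errors are false negatives.
case_b = metrics(tp=2, fp=0, fn=8, tn=90)

for name, (acc, prec, rec) in (("A", case_a), ("B", case_b)):
    print(f"case {name}: accuracy {acc:.2f}, precision {prec:.2f}, recall {rec:.2f}")
```

Both cases are 92% accurate, yet case A has low precision and case B has low recall. The helper does not guard against division by zero, which is fine for these counts but would matter for a test that produced no positives at all.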

Computing Confidence

A blind audio test is typically a series of trials, in each of which the listener differentiates two sounds, A and B. Given that he got K out of N trials correct, and each trial has 2 choices (X is A or X is B), what is the probability that he could get at least that many correct by random guessing? Confidence is the complement of that probability (100% minus it). For example, if the likelihood of guessing is 5% then confidence is 95%.

Confidence Formula

p = probability to guess right (1/2 or 50%)

n = # of trials – total

k = # of trials – successful

The formula:

(n choose k) * p^k * (1-p)^(n-k)

This gives the probability that random guessing would get exactly K of N trials correct. But since p = 1/2, (1-p) also = 1/2. So the formula can be simplified:

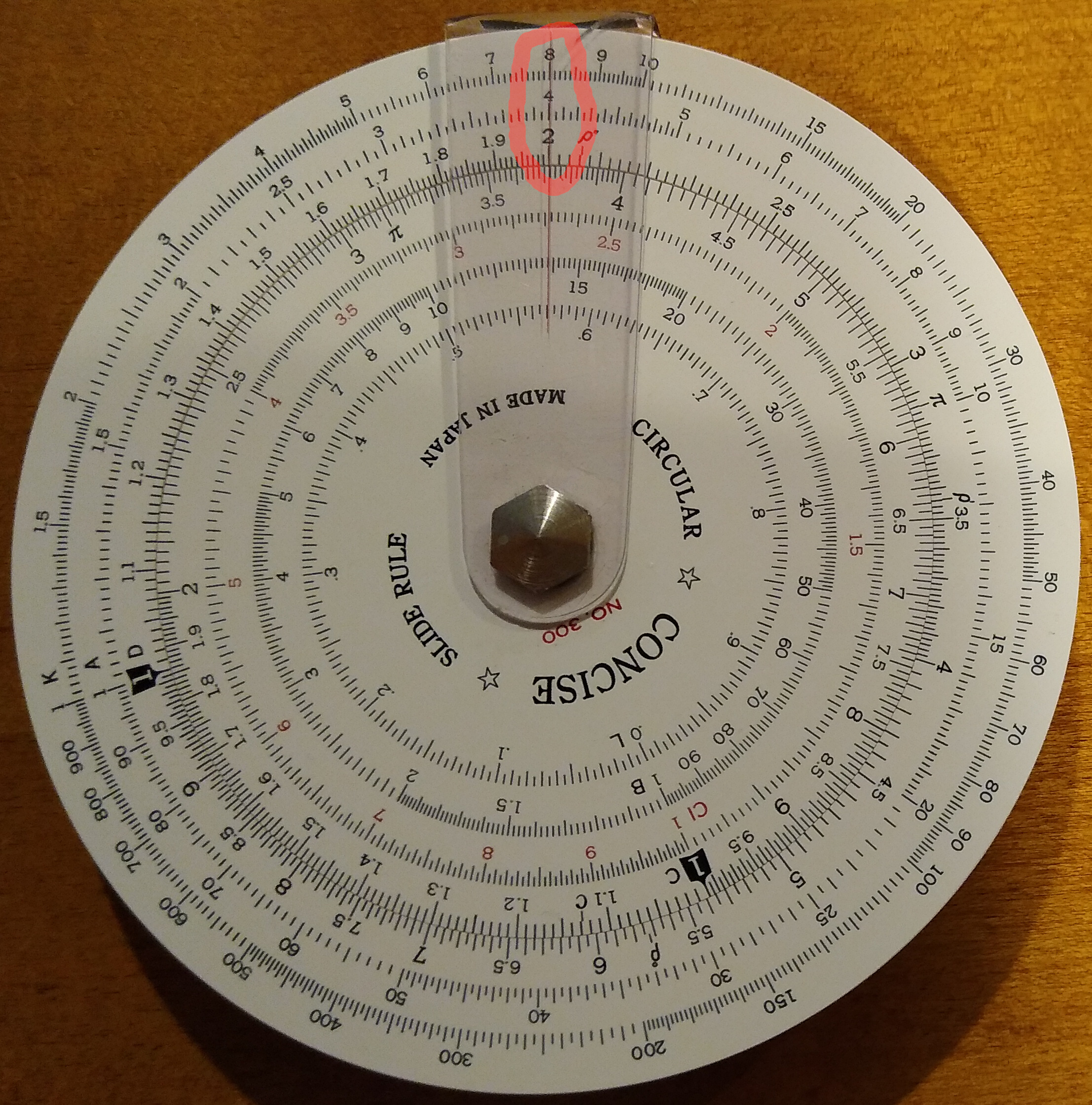

(n choose k) * p^n

Now, substituting for (n choose k), we have:

(n! * p^n) / (k! * (n-k)!)

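However, this formula gives the probability of one exact score only, not the % likelihood to pass the test by guessing. To get that, we must add up the probabilities of every passing score, from the decision threshold up to n.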

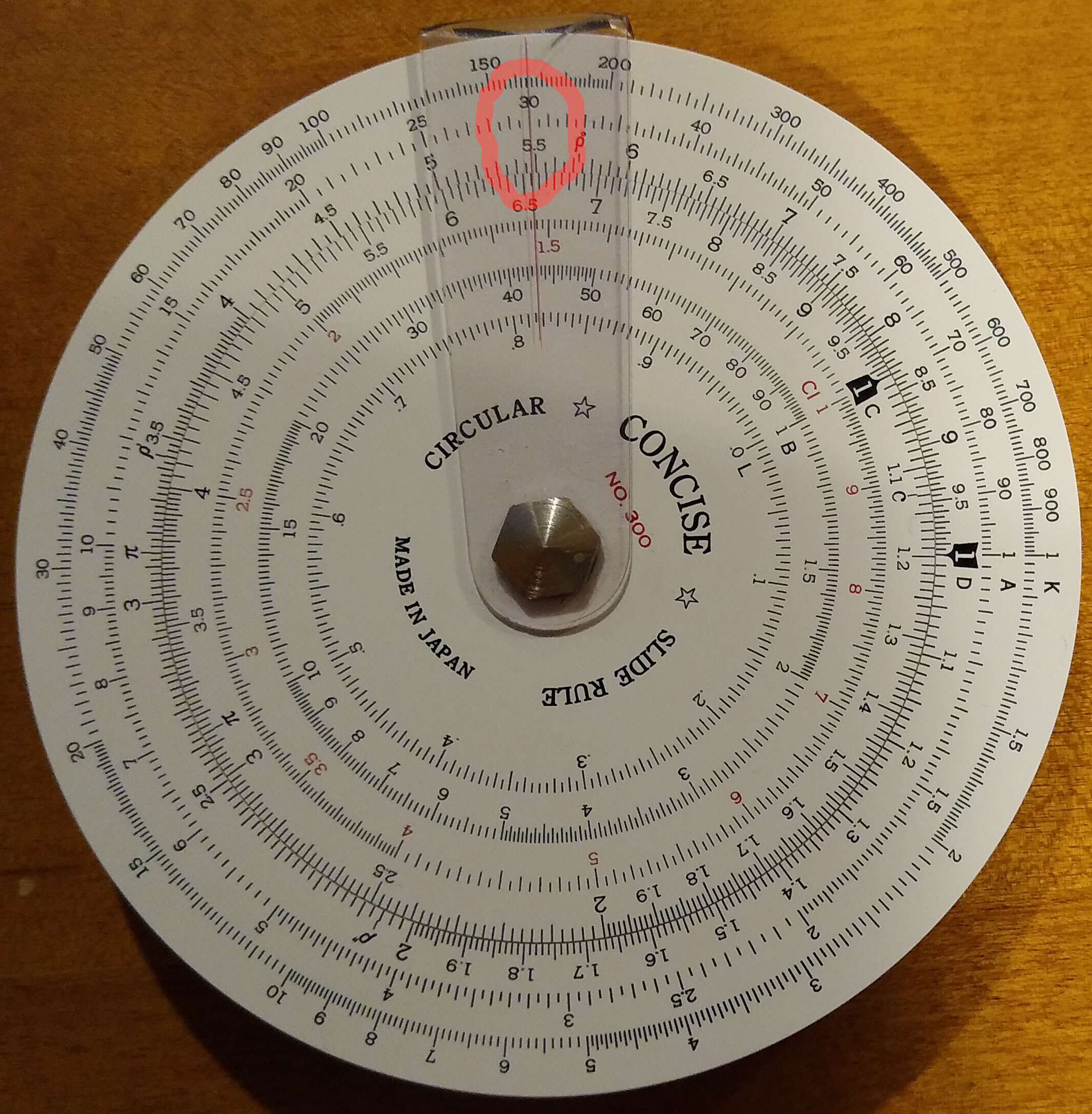

For example, consider a test consisting of 8 trials using a decision threshold of 6 correct. To pass the test, one must get at least 6 right. That means scoring 6, 7 or 8. These scores are disjoint and mutually exclusive (each person gets a single score, so you can’t score both 6 and 7), so the probability of getting any of them is the sum of their individual probabilities. Use the above formula 3 times: to compute the probabilities for 6, then 7, then 8. Then sum these 3 numbers. That is the probability that someone will pass the test by randomly guessing to reach our decision threshold of 6. Put differently: how often people who are guessing will get at least 6 right.
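The 8-trial example can be worked through in a few lines of Python. This is only a sketch of the arithmetic described above; the function names `prob_exactly` and `prob_at_least` are made up for this illustration.

```python
from math import comb

def prob_exactly(k, n):
    """Probability that pure guessing (p = 1/2) gets exactly k of n correct."""
    return comb(n, k) / 2**n

def prob_at_least(threshold, n):
    """Probability that pure guessing gets `threshold` or more of n correct."""
    return sum(prob_exactly(k, n) for k in range(threshold, n + 1))

# The three passing scores for 8 trials with a threshold of 6.
for k in (6, 7, 8):
    print(k, prob_exactly(k, 8))      # 28/256, 8/256, 1/256

p_pass = prob_at_least(6, 8)          # 37/256
print(f"pass by guessing: {p_pass:.1%}, confidence: {1 - p_pass:.1%}")
```

So a guesser passes this test about 14.5% of the time, which puts the confidence of a 6-of-8 threshold at about 85.5%.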

Now you can do a little homework by plugging into this formula:

- 4 trials all correct is 93.8% confidence.

- 5 trials all correct is 96.9% confidence.

- 7 correct out of 8 trials (1 mistake) is 96.5% confidence.

The Heisen-Sound Uncertainty Principle

A blind audio test cannot be high precision and high recall at the same time.

Proof: the tradeoff between precision & recall is defined by the test’s confidence threshold. Clearly, we always set that threshold greater than 50%, otherwise the results are no better than random guessing. But how much more than 50% should we set it?

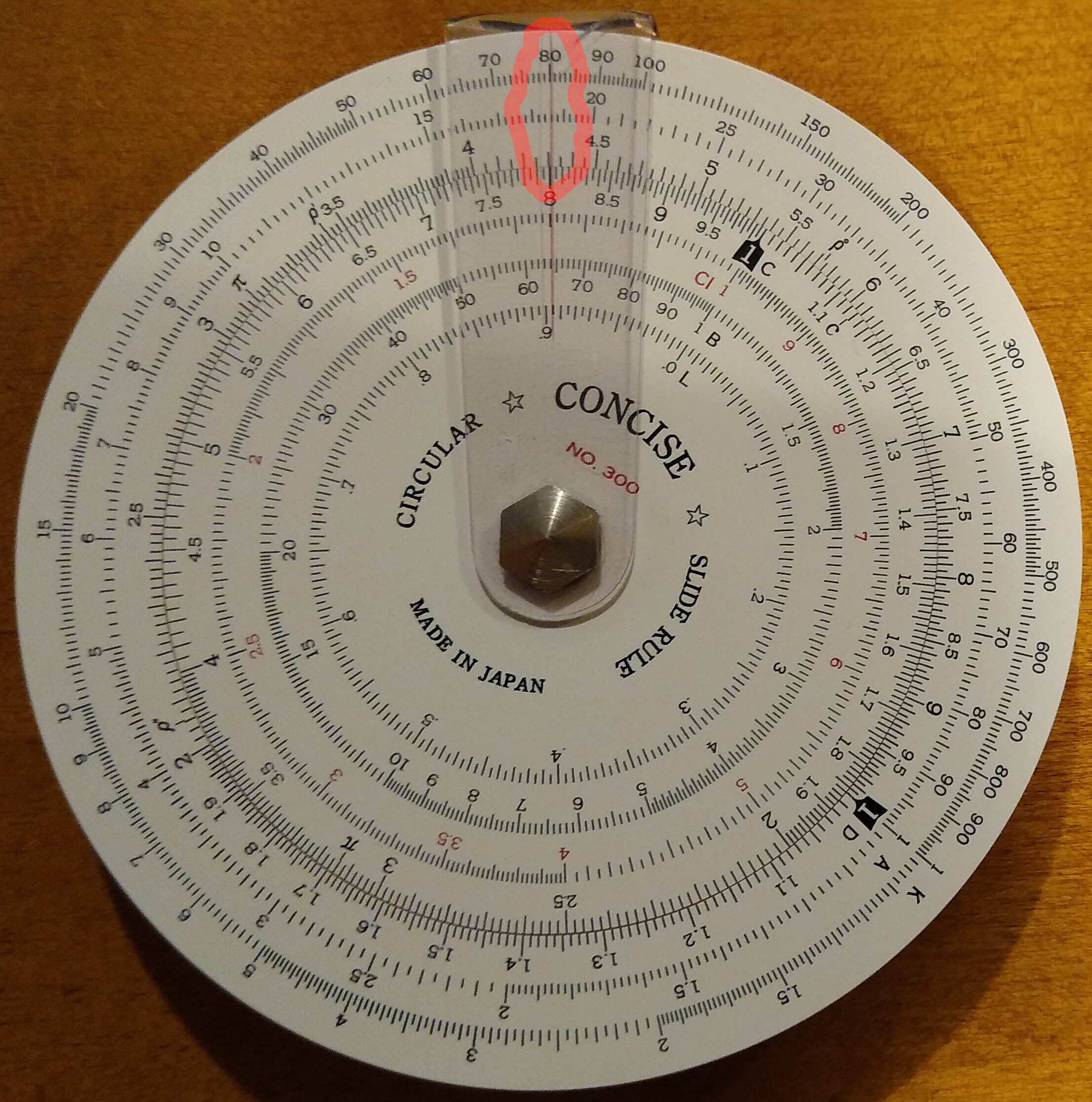

At first, intuition says to set it as high as possible. 95% is often used to validate statistical studies in a variety of fields (a significance level of 5%, i.e. p < 0.05). From the above definitions, the test’s confidence level governs its precision: a listener who is purely guessing has only a 5% chance of passing (a false positive). That means we are ignoring (considering invalid) all results with confidence below 95%. For example, somebody whose result reaches only 80% confidence is considered invalid; we assume he couldn’t hear the difference. But he did better than random guessing! That means he’s more likely than not to have heard a difference, but it didn’t reach our high threshold for confidence. So clearly, with a 95% threshold there will be some people who did hear a difference for whom our test falsely says they didn’t. Put differently, at 95% (or higher) we are likely to get some false negatives.

The only way to reduce these false negatives is to lower our confidence threshold. The extreme case is to set it at 51% (or anything > 50%). Now we’ll give credit to the above fellow whose result reached 80% confidence, and to a lot of other people. Yet this is our new problem: in reducing false negatives, we’ve increased false positives. Now someone whose result reaches only 51% confidence is considered valid, even though his score is low enough that he could easily have been guessing.

The bottom line: the test will always have false positives and negatives. Reducing one increases the other.
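To see the tradeoff in numbers, here is a minimal sketch. The 16-trial test length and the 70% per-trial hit rate (standing in for a listener who really does hear a subtle difference) are made-up values for illustration, not measurements.

```python
from math import comb

def pass_probability(threshold, n, p):
    """Chance of scoring at least `threshold` of n when each trial is right with probability p."""
    return sum(comb(n, k) * p**k * (1 - p)**(n - k) for k in range(threshold, n + 1))

N = 16          # trials per test (hypothetical)
P_HEARS = 0.7   # hypothetical per-trial hit rate of a listener who hears a real difference

for threshold in range(9, N + 1):
    false_pos = pass_probability(threshold, N, 0.5)          # a pure guesser passes
    false_neg = 1 - pass_probability(threshold, N, P_HEARS)  # the real listener fails
    print(f"pass at {threshold:2d}/{N}: false positive {false_pos:.3f}, false negative {false_neg:.3f}")
```

Reading down the output, each step up in the pass threshold shrinks the false positive column and grows the false negative column. With a fixed number of trials, there is no threshold that makes both small at once.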

Confidence vs. Raw Score

It’s important to emphasize that confidence is not the same as raw test score. From the above, 7 of 8 correct is a raw score of 7/8 = 87.5%, yet the confidence is 96.5%.

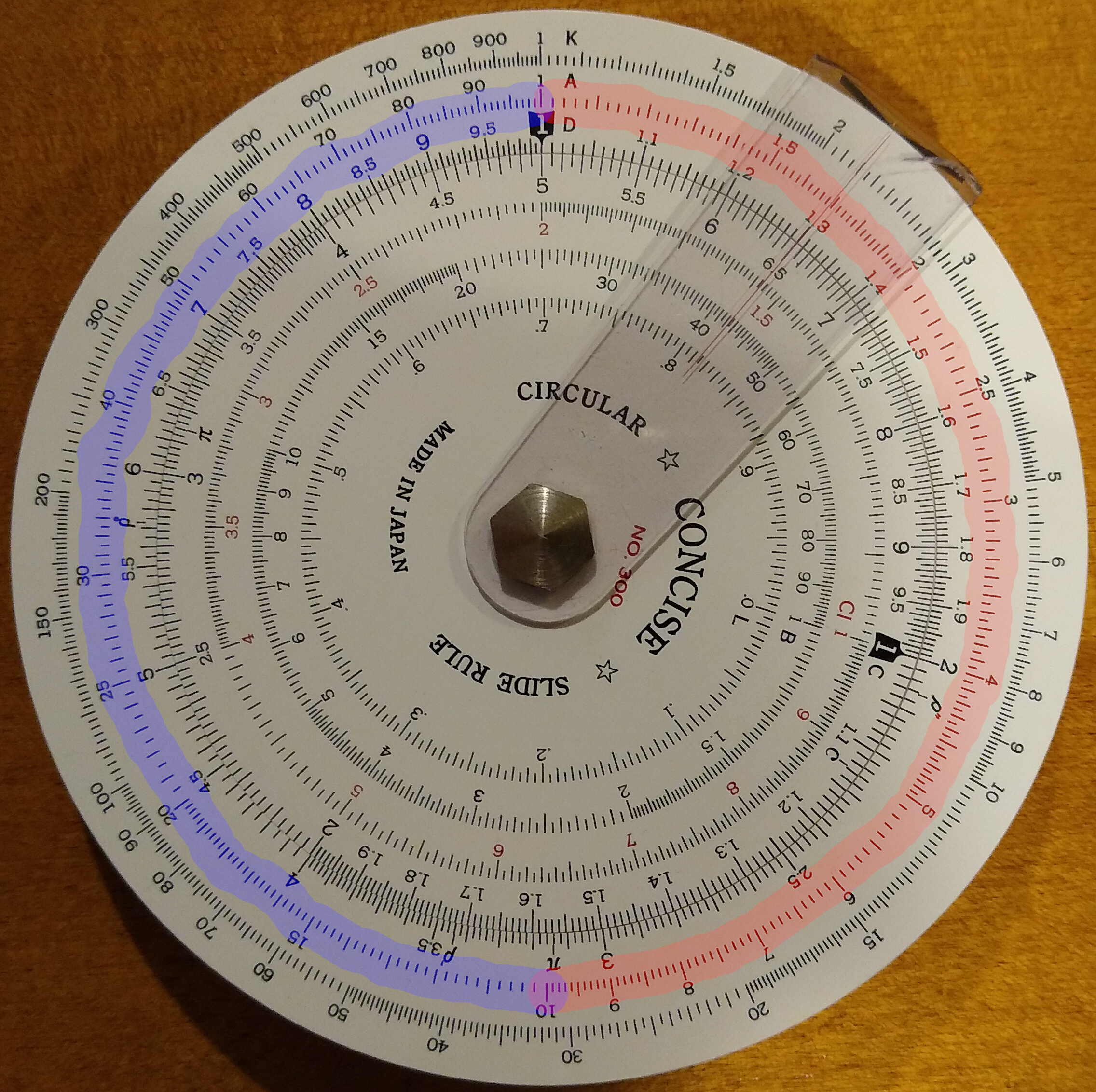

If you get 60% of the trials correct, your confidence may be higher or lower than 60%. It depends on how many trials you did. The more trials you did, the more confident the 60% score becomes. For example, 3 of 5 is only 50% confidence; 6 of 10 is 62.3%; 12 of 20 is 74.8%. Getting 60% of the trials correct, you reach 95% confidence at 48 of 80, which is 95.4% confident.
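These figures can be reproduced with a short script. It is a sketch that assumes the same model as the formula above (each guess is right with probability 1/2); `confidence` is simply a name chosen here.

```python
from math import comb

def confidence(k, n):
    """1 minus the probability that pure guessing gets at least k of n correct."""
    p_guess = sum(comb(n, i) for i in range(k, n + 1)) / 2**n
    return 1 - p_guess

# Same 60% raw score, increasing number of trials.
for k, n in [(3, 5), (6, 10), (12, 20), (48, 80)]:
    print(f"{k}/{n}: raw score {k / n:.0%}, confidence {confidence(k, n):.1%}")
```

The raw score column stays at 60% while the confidence column climbs with the number of trials.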

The intuition behind this is that if you are doing only slightly better than guessing, consistency (more trials) is what separates random flukes from actual performance. If you flip a coin 6 times, you may frequently get 4 heads. But if you flip a coin 600 times, you will almost never get 400 heads. Put differently, you can sometimes win in Vegas, but you can’t consistently win else it would still be a desert.

Problem is, we’re limited in how many trials we can do. Listener fatigue sets in after 10 to 20 trials, skewing the results. You must take a break and relax the ears before continuing. So getting high sensitivity/recall from ABX testing requires multiple tests, in order to reach high confidence from marginal raw scores.
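One way to combine several short sessions is simply to pool their trials, as sketched below. The four session scores are hypothetical. Pooling like this also assumes that the number of sessions was fixed in advance, that every session is counted (no dropping the bad ones), and that trials are independent.

```python
from math import comb

def confidence(k, n):
    """1 minus the probability that pure guessing gets at least k of n correct."""
    p_guess = sum(comb(n, i) for i in range(k, n + 1)) / 2**n
    return 1 - p_guess

# Hypothetical sessions as (correct, trials), each short enough to limit fatigue.
sessions = [(12, 20), (12, 20), (12, 20), (12, 20)]

for k, n in sessions:
    print(f"session {k}/{n}: confidence {confidence(k, n):.1%}")

total_k = sum(k for k, _ in sessions)
total_n = sum(n for _, n in sessions)
print(f"pooled {total_k}/{total_n}: confidence {confidence(total_k, total_n):.1%}")
```

No single 12-of-20 session reaches even 75% confidence, but the pooled 48 of 80 clears 95%.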

Optimal Confidence

The ideal confidence threshold is whatever serves our test purposes. Higher is not always better. It depends on what we are testing, and why. Do we need high precision, or high recall? Two opposite extreme cases illustrate this:

High precision: 99% confidence

We want to know what audio artifacts are audible beyond any doubt.

Use case: We’re designing equipment to be as cheap as possible and don’t want to waste money making it more transparent than it has to be. It has to be at least good enough to eliminate the most obvious audible flaws and we’re willing to accept that it might not be entirely transparent to all listeners.

Use case: We’re debunking audio-fools and the burden of proof is on them to prove beyond any doubt that they really are hearing what they claim. We’re willing to accept that some might actually be hearing differences but can’t prove it (false negatives).

High recall: 75% confidence

We want to detect the minimum thresholds of hearing: what is the smallest difference that is likely to be audible?

Use case: We’re designing state-of-the-art equipment. We’re willing to over-engineer it if necessary to achieve that, but we don’t want to over-engineer it more than justified by testing probabilities.

Use case: Audio-fools are proving that they really can hear what they claim, and the burden of proof is on us to prove they can’t hear what they claim. We’re willing to accept that some might not actually be hearing the differences, as long as the probabilities are on their side however slightly (false positives).

Why wouldn’t we use 51% confidence? Theoretically we could. But there’s so much noise, our results become statistically meaningless. Using 75% reduces the noise (or false positives) while still recognizing raw scores only slightly better than random guessing, provided we use more trials to keep false positives down. For example, if our threshold raw score is 60%, we pass 75% confidence at 15 of 25 (about 78.8%), whereas 12 of 20 falls just short at 74.8%.
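The search for "how many trials do I need at this raw score?" can be automated. The sketch below is one possible approach, not a standard procedure: `trials_needed` is a hypothetical helper that steps through test lengths where the raw score is a whole number of correct answers and stops at the first one that meets the target confidence.

```python
from math import comb

def confidence(k, n):
    """1 minus the probability that pure guessing gets at least k of n correct."""
    p_guess = sum(comb(n, i) for i in range(k, n + 1)) / 2**n
    return 1 - p_guess

def trials_needed(num, den, target, max_trials=500):
    """Smallest (k, n), with k/n == num/den and n a multiple of den, reaching `target` confidence.

    Returns None if no test length up to max_trials is enough.
    """
    for n in range(den, max_trials + 1, den):
        k = n * num // den
        if confidence(k, n) >= target:
            return k, n
    return None

# A 60% raw score is 3 correct out of every 5 trials.
print(trials_needed(3, 5, 0.75))   # (15, 25)
print(trials_needed(3, 5, 0.95))
```

For the 75% target this reproduces the 15 of 25 figure above. Passing the raw score as a fraction (`num`, `den`) rather than a float is a design choice made here to avoid rounding surprises when computing the number of correct trials.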

Conclusion

To mis-quote Churchill, “Blind testing is the worst form of audio testing, except for all the others.” Blind testing is an essential tool for audio engineering from hardware to software and other applications. For just one example, it’s played a crucial role in developing high quality codecs delivering the highest possible perceptual audio quality with the least bandwidth.

But blind testing is neither perfectly sensitive nor perfectly specific. It is easy to do it wrong and invalidate the results (not level matching, not choosing appropriate source material, ignoring listener training & fatigue). Even when done right it always has false positives or false negatives, usually both. When performing blind testing we must keep our goals in mind to select appropriate confidence thresholds (higher is not always better). High precision or specificity can be achieved in a single test, but high recall or sensitivity requires aggregating results across multiple tests.